The last two days were a lot of fun. Yesterday I went to Toronto for a meeting with ATI mobile team. The trip itself was pretty entertaining. I have a lot of things on my schedule so just five days ago I managed to give a definite answer to the question of whether I can come to Toronto. US Airways likes to overbook their flights, so after getting to the airport at 9am yesterday I found out that I can't get on my flight to Buffalo because it was full. I was supposed to meet up with David in Buffalo so we had to quickly change our plans and I took a flight directly to Toronto. Once I got there David and I ate lunch at a very nice Indian place and went to meet ATI guys. We talked about OpenVG, OpenGL ES and their mobile lineup. Getting to meet those guys was a lot of fun and the discussion was just great. Very productive for me. At the end we all went to grab dinner at a place called 'Spicy Momma'. That's one hell of a name for a restaurant! They're definitely not false advertising too (referring to the "spicy" not "momma" part). Two of the guys from the ATI team was vegetarian so we were able to team up and get lots of vegie food.

Since we haven't booked a hotel, after dinner we had to go and look for a place to stay. We found a hotel fairly quickly. We also got some cookies from the girl working at the reception desk (she baked them herself and in this case that was a good thing) and my "happy life" receipt has 'free cookies' very high up on the list, so that made me pretty happy =)

Today the weather was wonderful so we stopped on our way back at the Niagara falls and decided to eat lunch at a place with a great view. It's one of those places that just makes you feel better (even being a city boy myself). Fun.

Thursday, May 25, 2006

Monday, May 22, 2006

Improving reality

Historically there has been a big gap between real world and computer interfaces. Things behave one way in real world and our desktop applications behave another. I'd like to see us moving to some extend away from that. I've been talking about physics simulations becoming more widespread on the desktop last summer and ever since I went through a lot of them. Over the weekend I started looking into moving to a real physics engine. Open Dynamics Engine is, as far as I know, the best one. Problem there is that for desktop apps cloth, rope and water simulations are way more useful and currently it, still, takes too much effort to mimic them.

I'd just like to have a model, where moving from reality to the computer interface doesn't involve much of a mental switch. Or at least it's very natural. I'm not saying that it should behave exactly like something out there but it needs to be a lot more dynamic than what we have right now. Which is really, what we refer to when we talk about "organic interfaces".

There are some things that are spot on, that improve on what you normally get in real life and make computers act as what they really are, tools. Yesterday I was writing something by hand, thinking "man, this would be so much easier with a spell checker". You know you got something right, if you really miss it while performing a similar activity outside the computer world.

Talking about spell checking I played a bit with Sonnet over the weekend. I've been handling KSpell, then KSpell2 for a while and then I just grew tired of it last summer (for various reasons not really related to the code itself). I've been toying with the idea of full linguistic framework for a while. Besides spell checking we're talking about grammar checker, dictionary, thesaurus and translator. Sonnet is just that - full linguistic framework. I'd like to have all those functions available to all KDE applications. Being able to take a step back, look at all the problems I've seen and complains I got over the years from both users and developers and just sit down and rework the whole framework to fix them is great. Linguistics is fascinating and for some reasons there's not a whole lot of people who'd want to deal with it, at least not as far as its desktop usage goes.

I'd just like to have a model, where moving from reality to the computer interface doesn't involve much of a mental switch. Or at least it's very natural. I'm not saying that it should behave exactly like something out there but it needs to be a lot more dynamic than what we have right now. Which is really, what we refer to when we talk about "organic interfaces".

There are some things that are spot on, that improve on what you normally get in real life and make computers act as what they really are, tools. Yesterday I was writing something by hand, thinking "man, this would be so much easier with a spell checker". You know you got something right, if you really miss it while performing a similar activity outside the computer world.

Talking about spell checking I played a bit with Sonnet over the weekend. I've been handling KSpell, then KSpell2 for a while and then I just grew tired of it last summer (for various reasons not really related to the code itself). I've been toying with the idea of full linguistic framework for a while. Besides spell checking we're talking about grammar checker, dictionary, thesaurus and translator. Sonnet is just that - full linguistic framework. I'd like to have all those functions available to all KDE applications. Being able to take a step back, look at all the problems I've seen and complains I got over the years from both users and developers and just sit down and rework the whole framework to fix them is great. Linguistics is fascinating and for some reasons there's not a whole lot of people who'd want to deal with it, at least not as far as its desktop usage goes.

Wednesday, May 17, 2006

Random thoughts

I just remembered that I never mentioned how great Brazil was. And I definitely have to mention the fact that I've been taught how to play "Svn Trunk" which is the best card game I've ever seen. I believe the game is known as "Trucu" in Brazil, but since I've learned it, it's now internationalized and it's been renamed ;) For KDE 4 I'd like to have a common card canvas that things like KPat and in future "KDE SVN Trunk" can share (with some really great winning animations because unless there's a neat animation at the end, winning is pointless).

I'd like to make card decks stylable via SVG as well.

See this?

If that's not the proof that I'm the greatest international Trucu player I don't know what is...

Oh, and today my softrucks arrived. It's been raining a bit lately and I have too many skateboards around to not be able to break something daily on one of them. I'll sit down later tonight to install them on a board and will give it a spin. So if you won't see anything interesting happening graphics wise in Qt/KDE/X in the near future it's because Softrucks don't work that well and I'm most likely in a hospital =)

On a sidenote, a new version of Jahshaka (repeat quickly four times for an additional point bonus) has been released. That application is very impressive.

I'd like to make card decks stylable via SVG as well.

See this?

If that's not the proof that I'm the greatest international Trucu player I don't know what is...

Oh, and today my softrucks arrived. It's been raining a bit lately and I have too many skateboards around to not be able to break something daily on one of them. I'll sit down later tonight to install them on a board and will give it a spin. So if you won't see anything interesting happening graphics wise in Qt/KDE/X in the near future it's because Softrucks don't work that well and I'm most likely in a hospital =)

On a sidenote, a new version of Jahshaka (repeat quickly four times for an additional point bonus) has been released. That application is very impressive.

Monday, May 15, 2006

Precision

One of the most difficult problems of computer graphics today is producing truly robust algorithms. Working in floating point coordinate system introduces a lot of subtle problems. Computational geometry approaches the problem from two directions:

1) Implementing algorithms on top of rational numbers.

2) Introducing rounding phases to algorithm, usually in a post processing stage.

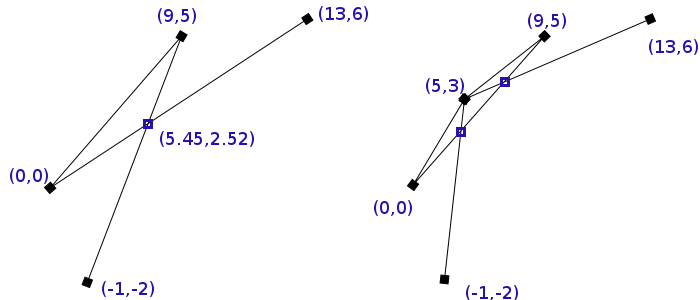

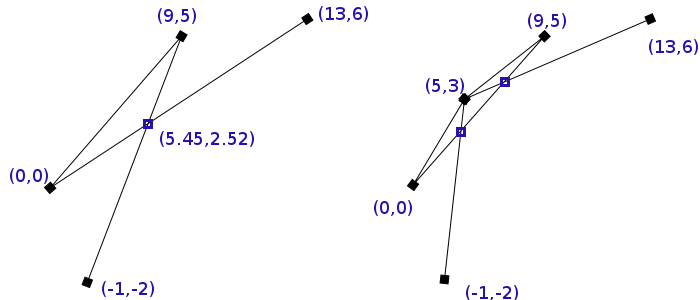

For example lets look at a pretty popular example, based on the one from Hobby's paper. In this example we have three line segments with one intersection between them. Now if we round up the intersection to integer coordinates two new intersections are introduced - ones that are completely incorrect. Although we don't round up that heavily the following example scales into infinity as you uniformly divide coordinates by the same number.

In this example we have three line segments with one intersection between them. Now if we round up the intersection to integer coordinates two new intersections are introduced - ones that are completely incorrect. Although we don't round up that heavily the following example scales into infinity as you uniformly divide coordinates by the same number.

There are two main reasons why this behavior is so undesirable for graphics.

1) It produces obviously incorrect results.

2) Optimized algorithms often break down due to those inconsistencies.

The first one is actually less important - on this scale the degeneration of our results is likely to produce errors that won't have too significant effects on what you see on the screen. Two is a lot more severe. For example a lot of the more popular geometrical algorithms includes a step of finding segments containing a point. Assuming that a segment has floating point coordinates and the point is, as well, in floating point coordinates - the containment test will very quickly break down as the limited precision will hit the computations. So there's no way to reliable perform that test, due to which all those algorithms will simple fail. As a result, an incredibly small rounding error will likely cause a complete failure of an algorithm and because of that small error you won't see any results on the screen. That's a serious problem.

Going back to the solutions to this problem. John Hobby wrote a paper entitled "Practical Segment Intersection with Finite Precision Output". Algorithm which he introduced in that paper has since been named "snap rounding". Numerous papers have been written since about this algorithm, some of the better ones include "Iterated Snap Rounding" by Halperin and Packer and "Snap Rounding Line Segments Efficiently in Two and Three Dimensions" by Goodrich and Guibas. The basic principle of this algorithm is very simple; every event point is computed within some tolerance square. Every tolerance square has its middle computed and all events that we encounter, whose coordinates fall withing already existing tolerance square are "bent" in a way that changes their coordinate to be equal to the middle of the tolerance square. This technique works surprisingly well.

The second method, often employed by professional grade geometrical software, involves replacing float/double by a custom "rational" number class. They produce mathematically correct results, which is ideal. The reason why we can't really even consider them is that they're about 30+ times slower than doubles.

Now the reason I went on this rant is that I've been testing results of my new algorithm for rasterizing polygons, which involves decomposition, and even though on paper the algorithm was always correct and the unit tests were all passing, the algorithm was falling apart in some cases. I've spent a while going through my intersection detection code, snap rounding implementation and couldn't find anything. Then I looked at some of the data files I had and they were only in limited precision of .001 which is not even close enough to being precise enough for floating point intersections/coordinate systems. Feel my pain =)

1) Implementing algorithms on top of rational numbers.

2) Introducing rounding phases to algorithm, usually in a post processing stage.

For example lets look at a pretty popular example, based on the one from Hobby's paper.

In this example we have three line segments with one intersection between them. Now if we round up the intersection to integer coordinates two new intersections are introduced - ones that are completely incorrect. Although we don't round up that heavily the following example scales into infinity as you uniformly divide coordinates by the same number.

In this example we have three line segments with one intersection between them. Now if we round up the intersection to integer coordinates two new intersections are introduced - ones that are completely incorrect. Although we don't round up that heavily the following example scales into infinity as you uniformly divide coordinates by the same number.There are two main reasons why this behavior is so undesirable for graphics.

1) It produces obviously incorrect results.

2) Optimized algorithms often break down due to those inconsistencies.

The first one is actually less important - on this scale the degeneration of our results is likely to produce errors that won't have too significant effects on what you see on the screen. Two is a lot more severe. For example a lot of the more popular geometrical algorithms includes a step of finding segments containing a point. Assuming that a segment has floating point coordinates and the point is, as well, in floating point coordinates - the containment test will very quickly break down as the limited precision will hit the computations. So there's no way to reliable perform that test, due to which all those algorithms will simple fail. As a result, an incredibly small rounding error will likely cause a complete failure of an algorithm and because of that small error you won't see any results on the screen. That's a serious problem.

Going back to the solutions to this problem. John Hobby wrote a paper entitled "Practical Segment Intersection with Finite Precision Output". Algorithm which he introduced in that paper has since been named "snap rounding". Numerous papers have been written since about this algorithm, some of the better ones include "Iterated Snap Rounding" by Halperin and Packer and "Snap Rounding Line Segments Efficiently in Two and Three Dimensions" by Goodrich and Guibas. The basic principle of this algorithm is very simple; every event point is computed within some tolerance square. Every tolerance square has its middle computed and all events that we encounter, whose coordinates fall withing already existing tolerance square are "bent" in a way that changes their coordinate to be equal to the middle of the tolerance square. This technique works surprisingly well.

The second method, often employed by professional grade geometrical software, involves replacing float/double by a custom "rational" number class. They produce mathematically correct results, which is ideal. The reason why we can't really even consider them is that they're about 30+ times slower than doubles.

Now the reason I went on this rant is that I've been testing results of my new algorithm for rasterizing polygons, which involves decomposition, and even though on paper the algorithm was always correct and the unit tests were all passing, the algorithm was falling apart in some cases. I've spent a while going through my intersection detection code, snap rounding implementation and couldn't find anything. Then I looked at some of the data files I had and they were only in limited precision of .001 which is not even close enough to being precise enough for floating point intersections/coordinate systems. Feel my pain =)

Thursday, May 11, 2006

The basics

If you look at the frameworks we have and the way 2D graphics is heading, it's obvious everything we render is really a polygon. Rendering any other primitives is more an optimization than anything else. There's a few reasons for that. One of the more important ones is the complexity that is introduced with stroking (I'd crack a joke about difficulties of stroking here but lets skip that). For example lets look at the rectangle underneath. We're using a linear gradient stroke on it:

What should be obvious is that it's actually two shapes. We have the fill but we also have the stroke

but we also have the stroke  . Here be dragons, because the stroke is not a rectangle anymore! It's a polygon with hole! So rendering this seemingly simple shape suddenly becomes rather complex. Now a lot of people will notice that a simple hack could be to draw two rectangles

. Here be dragons, because the stroke is not a rectangle anymore! It's a polygon with hole! So rendering this seemingly simple shape suddenly becomes rather complex. Now a lot of people will notice that a simple hack could be to draw two rectangles

as in : first and then

and then  with the ending result being

with the ending result being  . Then of course you need to special case because if the fill has an alpha you will end up with

. Then of course you need to special case because if the fill has an alpha you will end up with  which is cleary incorrect as now the fill seems to have a gradient... Further more if we're overlaying shapes that optimization is right out. So on this extremely simple example we can see that special cases are accumulating very quickly and the code gets really convoluted very quickly. The question becomes whether making the code so ugly and error prone (especially as the number of conditions which affect what kind of rendering path the code takes, accumulates) is a good trade of for small optimizations.

which is cleary incorrect as now the fill seems to have a gradient... Further more if we're overlaying shapes that optimization is right out. So on this extremely simple example we can see that special cases are accumulating very quickly and the code gets really convoluted very quickly. The question becomes whether making the code so ugly and error prone (especially as the number of conditions which affect what kind of rendering path the code takes, accumulates) is a good trade of for small optimizations.

Without the support for stroking and alpha-blending, things tend to be easy. Unfortunately the reality of the fact is that both are hard requirements for any decent rendering framework. So for all of you who've been wondering why the new rendering frameworks are slower than the code you were using so far to draw rectangles and lines, now you know. In Qt we try to make people happy by having all those special cases, by reverting back to using Xlib whenever possible and, of course, by improving the frameworks but it's just a lot of work. I'll try to go over some of the things I'm doing with graphics in Qt, KDE and X.

What should be obvious is that it's actually two shapes. We have the fill

but we also have the stroke

but we also have the stroke  . Here be dragons, because the stroke is not a rectangle anymore! It's a polygon with hole! So rendering this seemingly simple shape suddenly becomes rather complex. Now a lot of people will notice that a simple hack could be to draw two rectangles

. Here be dragons, because the stroke is not a rectangle anymore! It's a polygon with hole! So rendering this seemingly simple shape suddenly becomes rather complex. Now a lot of people will notice that a simple hack could be to draw two rectanglesas in : first

and then

and then  with the ending result being

with the ending result being  . Then of course you need to special case because if the fill has an alpha you will end up with

. Then of course you need to special case because if the fill has an alpha you will end up with  which is cleary incorrect as now the fill seems to have a gradient... Further more if we're overlaying shapes that optimization is right out. So on this extremely simple example we can see that special cases are accumulating very quickly and the code gets really convoluted very quickly. The question becomes whether making the code so ugly and error prone (especially as the number of conditions which affect what kind of rendering path the code takes, accumulates) is a good trade of for small optimizations.

which is cleary incorrect as now the fill seems to have a gradient... Further more if we're overlaying shapes that optimization is right out. So on this extremely simple example we can see that special cases are accumulating very quickly and the code gets really convoluted very quickly. The question becomes whether making the code so ugly and error prone (especially as the number of conditions which affect what kind of rendering path the code takes, accumulates) is a good trade of for small optimizations.Without the support for stroking and alpha-blending, things tend to be easy. Unfortunately the reality of the fact is that both are hard requirements for any decent rendering framework. So for all of you who've been wondering why the new rendering frameworks are slower than the code you were using so far to draw rectangles and lines, now you know. In Qt we try to make people happy by having all those special cases, by reverting back to using Xlib whenever possible and, of course, by improving the frameworks but it's just a lot of work. I'll try to go over some of the things I'm doing with graphics in Qt, KDE and X.

Subscribe to:

Posts (Atom)