In the basic case, meaning simple polygons, tessellation is trivial and there are papers describing techniques to do in linear time like this or this. The problem is that most polygons that we currently decompose are not simple. Self-intersecting polygons with holes are very common and that's where most of our algorithms falls apart.

There are two papers describing handling such complex polygons: An implementation of a Near-Linear Polygon Triangulation Algorithm for General Polygons and

FIST: Fast Industrial-Strength Triangulation of Polygons. The detail that is usually not mentioned is that handling self-intersecting polygons in those algorithms has severe effects on their complexity (from nearly-linear to n^2*log*n).

Which leads to trivial conclusion #1: if tessellation of simple polygons is almost linear then would it be faster to unwind complex polygons and tessellate created this way simple polygons rather than tessellate directly one complex polygon. The only challenging aspect of unwinding polygons is finding the points where the polygon self-intersects. As a side-effect we venture into a lot better researched part of computational geometry - segment intersections. We can apply Bentley&Ottmann algorithm between the edges of a polygon and mix it with any of the snap-rounding techniques to try to handle precision problems.

Now this operates in nlogn but the catch is that when rendering large number of simple non-self-intersecting shapes we do a whole lot of work for absolutely nothing.

That in turn leads to conclusion #2: when rendering large amounts of simple primitives our fancy tessellation algorithm falls apart because it does a lot of work for absolutely nothing. Algorithmically there's nothing one can do here, you have to have a little bit of higher-level knowledge that can help you figure out whether the following primitive to be rendered is simple or not.

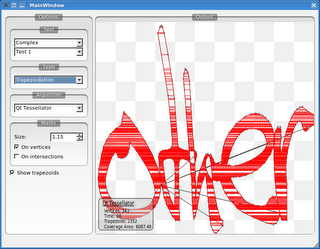

And now my latest thorn: when rendering complex polygons we generate a lot of trapezoids (due to the fact that almost every vertex forces generation) which after rasterization all fit within one scanline (happens very often when rendering on a big viewport mapped to a small window). So when the server is rasterizing those trapezoids it does a lot of work for nothing. The horizontal red spans in the following image show trapezoids which visually won't add anything to the rendering (after rasterization of them that is).

I'm still a little bit torn as to at which step should merging of those trapezoids actually happen.

4 comments:

Here's the basics of an algorithm I've been playing with. Break the image up into its set of lines and curves. Put each of these into a rectangle. In the rectangle you color half the image and the other half is transparent. Use shaders to draw the curves. Each of the rectangles is assigned a depth. By controlling the order that rectangles paint and their depth values you can paint the desired imaged. If you don't have shaders implement then in software by manipulating a texture.

Here's the basics of an algorithm I've been playing with. Break the image up into its set of lines and curves. Put each of these into a rectangle. In the rectangle you color half the image and the other half is transparent. Use shaders to draw the curves. Each of the rectangles is assigned a depth. By controlling the order that rectangles paint and their depth values you can paint the desired imaged. If you don't have shaders implement then in software by manipulating a texture.

From what I understand snap-rounding is not robust and will create a lot of problems on it's own.

Triangulation is not a trivial problem Zack ;) I have dozen of test cases and stress tests that have broken my nights, and as you can see a tessellator coder has never finished to do his job :)

Trapezoidation algorithms aren't so good, because they don't produce the optimal (minimum) number of triangles. FIST doesn't handle correctly degenerative situations (anyway it ensures that a crash will never happen, but the results would be incorrect in those cases).

Fortunately, after a long research i ended up with a very good tessellator, included in AmanithVG.

Keep the ball rolling Zack :)

Post a Comment